How to Upload a File to an S3 Bucket Using Python

How to Upload And Download Files From AWS S3 Using Python (2022)

Learn how to use cloud resources in your Python scripts

I am writing this post out of sheer frustration.

Every post I've read on this topic assumed that I already had an account in AWS, an S3 bucket, and a pile of stored data. They only show the code but kindly gloss over the most important part: making the code work through your AWS account.

Well, I could've figured out the code easily, thank you very much. I had to sift through many Stack Overflow threads and the AWS docs to get rid of every nasty authentication error along the way.

So that you won't feel the same frustration and have to do the hard work yourself, I will share all the technicalities of managing an S3 bucket programmatically, right from account creation to giving your local machine permission to access your AWS resources.

Step 1: Set up an account

Right, let's start by creating your AWS account if you haven't already. Nothing unusual; just follow the steps from this link:

Then, we will go to the AWS IAM (Identity and Access Management) console, where we will be doing most of the work.

From the console, you can easily switch between AWS regions, create users, add policies, and allow access to your user account. We will do each one by one.

Step 2: Create a user

For one AWS account, you can create multiple users, and each user can have various levels of access to your account's resources. Let's create a sample user for this tutorial:

In the IAM console:

- Go to the Users tab.

- Click on Add users.

- Enter a username in the field.

- Tick the "Access key - Programmatic access" field (essential).

- Click "Next" and "Attach existing policies directly."

- Tick the "AdministratorAccess" policy.

- Click "Next" until you see the "Create user" button.

- Finally, download the given CSV file of your user's credentials.

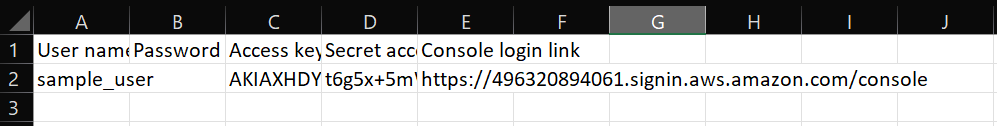

It should look like this:

Store it somewhere safe because we will be using the credentials later.

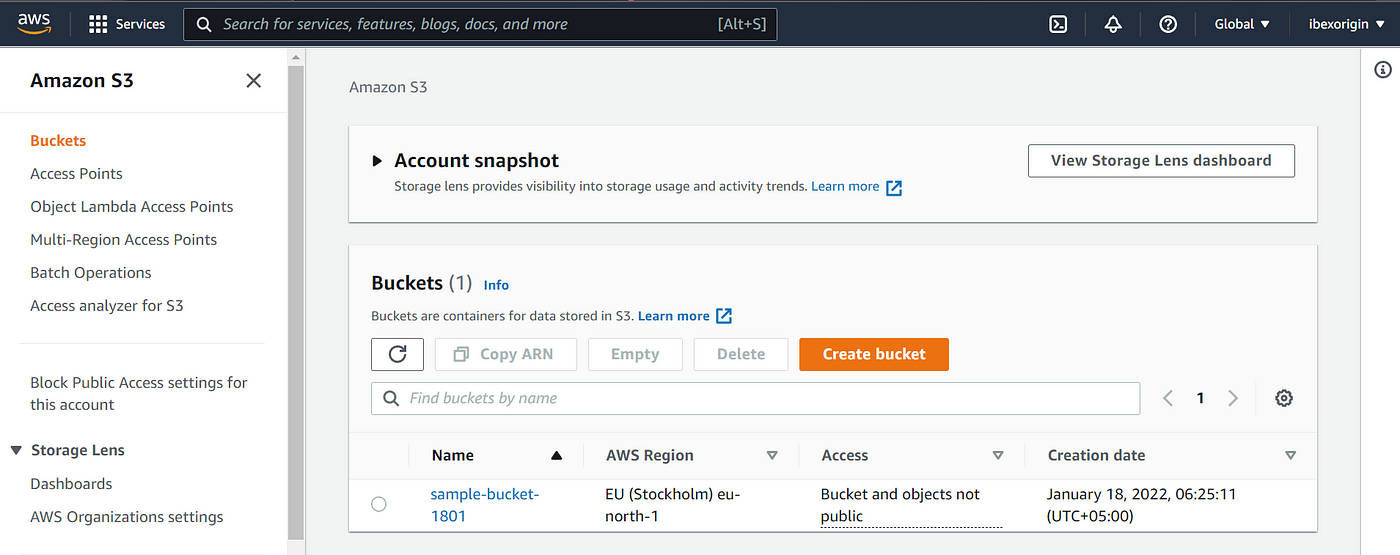
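If you later want to feed those credentials to a script instead of typing them, a minimal sketch for reading the CSV is below. It assumes the file has the column headers "Access key ID" and "Secret access key" (how the IAM console labels them in my experience; check your own file). The function name read_access_keys is just for illustration.

```python
import csv
from pathlib import Path


def read_access_keys(csv_path):
    """Return (access_key_id, secret_access_key) from an IAM credentials CSV.

    Assumes the headers "Access key ID" and "Secret access key";
    adjust the keys below if your file labels them differently.
    """
    # utf-8-sig silently drops a byte-order mark if the file starts with one
    with Path(csv_path).open(newline="", encoding="utf-8-sig") as f:
        first_row = next(csv.DictReader(f))
    return first_row["Access key ID"].strip(), first_row["Secret access key"].strip()
```

For example, `key_id, secret = read_access_keys("credentials.csv")` (the file name is hypothetical). Avoid printing the secret or committing the CSV to version control.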

Step 3: Create a bucket

Now, let's create an S3 bucket where we can store data.

In the IAM console:

- Click Services in the top left corner.

- Scroll down to Storage and select S3 from the right-hand list.

- Click "Create bucket" and give it a name.

You can choose any region you want. Leave the rest of the settings as they are and click "Create bucket" again.
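Once your credentials are configured (Step 5), the same bucket can also be created from Python. The sketch below assumes you pass in a client from `boto3.client("s3")`; the helper name create_bucket_in_region and its name check are my own. The regex covers only the basic S3 naming rules (3 to 63 characters, lowercase letters, digits, dots and hyphens, starting and ending with a letter or digit), not every edge case. Buckets in us-east-1 are created without a location constraint, which is why that region is special-cased.

```python
import re

# Simplified subset of the S3 bucket naming rules (not exhaustive)
_BUCKET_NAME = re.compile(r"^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$")


def create_bucket_in_region(s3_client, name, region):
    """Create bucket `name` in `region` using a boto3 S3 client."""
    if not _BUCKET_NAME.match(name):
        raise ValueError(f"invalid bucket name: {name!r}")
    kwargs = {"Bucket": name}
    # us-east-1 is the default location, so no LocationConstraint is sent for it
    if region != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return s3_client.create_bucket(**kwargs)
```

Usage would look like `create_bucket_in_region(boto3.client("s3"), "your-bucket-name", "eu-west-1")`, with your own bucket name and region.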

Step 4: Create a policy and add it to your user

In AWS, access is managed through policies. A policy can be a set of settings or a JSON document attached to an AWS object (user, resource, group, role), and it controls what you can do with that object.

Below, we will create a policy that lets us interact with our bucket programmatically, i.e., through the CLI or in a script.

In the IAM console:

- Go to the Policies tab and click "Create policy."

- Click the "JSON" tab and insert the code below:

Replace your-bucket-name with your own. If you look closely at the Action field of the JSON, we are putting s3:* to allow any interaction with our bucket. This is very broad, so you may want to allow only specific actions. In that case, check out this page of the AWS docs to learn how to limit access.
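The exact JSON from the original post isn't reproduced here. As a stand-in, this sketch builds a policy of the shape described above and prints it as JSON you could paste into the "JSON" tab. It is an assumption on my part that you want both Resource entries. The bucket ARN covers bucket-level calls such as listing, and the `/*` ARN covers object-level calls such as uploads and downloads. The function name is hypothetical.

```python
import json


def bucket_policy_document(bucket_name, actions=("s3:*",)):
    """Build IAM policy JSON that allows `actions` on a single bucket."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": list(actions),
                "Resource": [
                    # bucket-level operations (e.g. ListBucket)
                    f"arn:aws:s3:::{bucket_name}",
                    # object-level operations (e.g. GetObject, PutObject)
                    f"arn:aws:s3:::{bucket_name}/*",
                ],
            }
        ],
    }
    return json.dumps(policy, indent=4)
```

To narrow access, pass specific actions, for example `bucket_policy_document("your-bucket-name", actions=("s3:GetObject", "s3:PutObject", "s3:ListBucket"))`.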

Creating the policy does not attach it to anything yet; it only refers to the bucket. We need to attach it to the user so that your API credentials work correctly. Here are the instructions:

In the IAM panel:

- Go to the Users tab and click on the user we created in the last section.

- Click the "Add permissions" button.

- Click the "Attach existing policies" tab.

- Filter them by the policy we just created.

- Tick the policy, review it, and click "Add" one final time.
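If you ever need to repeat these clicks for several users, they map to two IAM API calls. A minimal sketch, assuming `iam_client` is `boto3.client("iam")` running under credentials that are allowed to manage IAM (an administrator, for example). The user and policy names you pass are up to you, and the helper name is hypothetical.

```python
def attach_policy_to_user(iam_client, user_name, policy_name, policy_json):
    """Create a customer-managed policy and attach it to an IAM user.

    Returns the ARN of the newly created policy.
    """
    # Equivalent of Policies > "Create policy" > JSON tab
    response = iam_client.create_policy(
        PolicyName=policy_name,
        PolicyDocument=policy_json,
    )
    policy_arn = response["Policy"]["Arn"]
    # Equivalent of Users > "Add permissions" > "Attach existing policies"
    iam_client.attach_user_policy(UserName=user_name, PolicyArn=policy_arn)
    return policy_arn
```

The function does no error handling; if a policy with the same name already exists, the `create_policy` call fails with an error.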

Step 5: Download the AWS CLI and configure your user

We download the AWS command-line tool because it makes authentication so much easier. Go to this page and download the installer for your platform:

Run the installer and reopen any active terminal sessions to let the changes take effect. Then, type aws configure:

Insert your AWS Access Key ID and Secret Access Key (from the CSV file), along with the region you created your bucket in. You can find the region name of your bucket on the S3 page of the console:

Just press "Enter" when you reach the Default output format field in the configuration. There won't be any output.

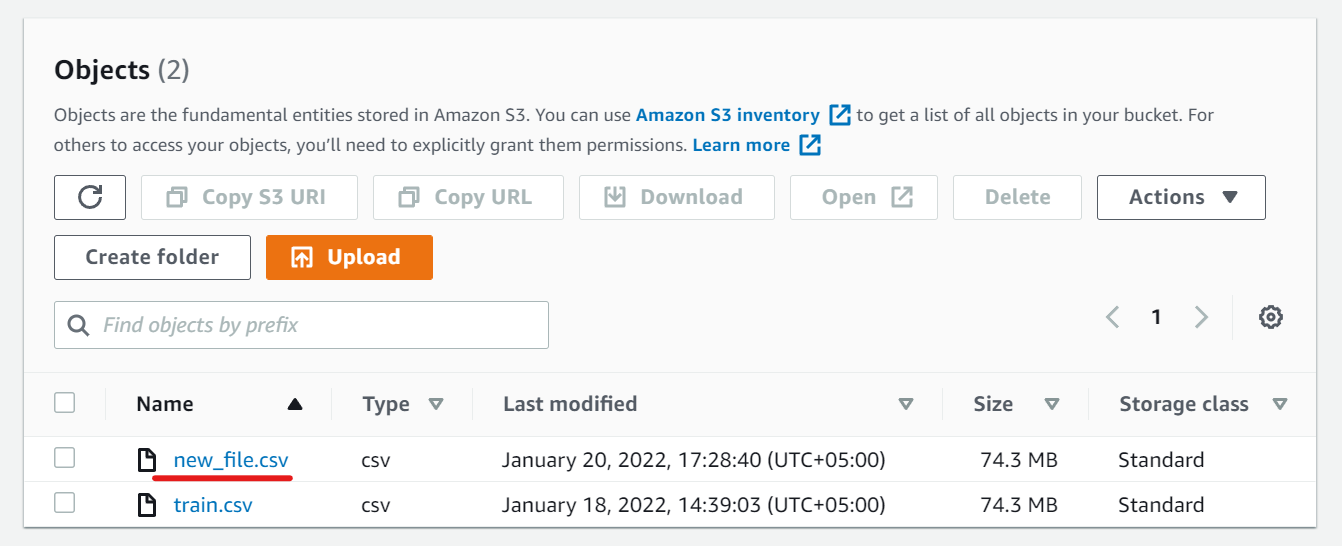
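If you're curious where those answers go: `aws configure` saves them in two plain-text INI files, `~/.aws/credentials` (keys) and `~/.aws/config` (region), which boto3 also reads automatically. This sketch renders text in that layout so you can see what to look for when inspecting the files. It isn't a replacement for `aws configure`, and the function name is mine. One detail worth knowing: in the config file, named profiles are written as `[profile name]`, while the credentials file uses just `[name]`.

```python
import configparser
import io


def render_aws_files(access_key_id, secret_access_key, region, profile="default"):
    """Return (credentials_text, config_text) in the ~/.aws INI layout."""
    credentials = configparser.ConfigParser()
    credentials[profile] = {
        "aws_access_key_id": access_key_id,
        "aws_secret_access_key": secret_access_key,
    }

    config = configparser.ConfigParser()
    # Named profiles in ~/.aws/config carry a "profile " prefix; default does not
    section = profile if profile == "default" else f"profile {profile}"
    config[section] = {"region": region}

    rendered = []
    for parser in (credentials, config):
        buffer = io.StringIO()
        parser.write(buffer)
        rendered.append(buffer.getvalue())
    return rendered[0], rendered[1]
```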

Step 6: Upload your files

We are almost there.

Now, we upload a sample dataset to our bucket so that we can download it in a script later:

It should be easy once you get to the S3 page and open your bucket.

Step 7: Check if authentication is working

Finally, pip install the Boto3 package and run this snippet:

If the output contains your bucket name(s), congratulations: you now have full access to many AWS services through boto3, not just S3.
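The original snippet isn't reproduced here, but a check along these lines does the same job. It is a sketch that assumes you pass in `boto3.client("s3")`. `list_buckets()` returns a dict whose `"Buckets"` entry is a list of dicts with a `"Name"` key. If no credentials are found, boto3 raises a `NoCredentialsError` instead.

```python
def bucket_names(s3_client):
    """Return the names of all buckets visible to the configured credentials."""
    response = s3_client.list_buckets()
    return [bucket["Name"] for bucket in response.get("Buckets", [])]
```

Run it with `import boto3` followed by `print(bucket_names(boto3.client("s3")))`; the bucket you created in Step 3 should appear in the list.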

Using Python Boto3 to download files from the S3 bucket

With the Boto3 package, you have programmatic access to many AWS services such as SQS, EC2, SES, and many aspects of the IAM console.

However, as a regular data scientist, you will mostly need to upload and download data from an S3 bucket, so we will only cover those operations.

Let's start with the download. After importing the package, create an S3 client using the client function:

To download a file from an S3 bucket and immediately save it, we can use the download_file function:

There won't be any output if the download is successful. Pass the exact path of the file inside the bucket (its object key) to the Key parameter. The Filename parameter should contain the local path you want to save the file to.
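Since the original snippet is missing, here is a minimal sketch of the download step. It assumes `s3_client` is `boto3.client("s3")`. As far as I know, `download_file` does not create missing local folders, so the helper creates them first. The helper name and the paths in the usage line are hypothetical.

```python
from pathlib import Path


def download(s3_client, bucket, key, filename):
    """Download s3://bucket/key to a local file, creating parent folders first."""
    target = Path(filename)
    target.parent.mkdir(parents=True, exist_ok=True)
    # download_file returns None on success and raises an exception on failure
    s3_client.download_file(Bucket=bucket, Key=key, Filename=str(target))
    return target
```

For example: `download(boto3.client("s3"), "your-bucket-name", "sample.csv", "data/sample.csv")`.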

Uploading is also very straightforward:

The function is upload_file, and you only have to change the order of the parameters from the download function: Filename comes first, then Bucket and Key.
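A common next step is uploading a whole folder rather than one file. This sketch calls `upload_file` once per file and mirrors the local folder layout under an optional key prefix. It assumes `s3_client` is `boto3.client("s3")`; the helper name and the prefix convention are my own choices, not a boto3 feature. Keys are built with forward slashes so the layout looks the same when uploading from Windows.

```python
from pathlib import Path


def upload_directory(s3_client, local_dir, bucket, prefix=""):
    """Upload every file under local_dir to bucket; return the uploaded keys."""
    root = Path(local_dir)
    clean_prefix = prefix.strip("/")
    keys = []
    for path in sorted(root.rglob("*")):
        if not path.is_file():
            continue  # folders are implied by the keys, S3 has no real directories
        relative = path.relative_to(root).as_posix()
        key = f"{clean_prefix}/{relative}" if clean_prefix else relative
        s3_client.upload_file(Filename=str(path), Bucket=bucket, Key=key)
        keys.append(key)
    return keys
```

For example, `upload_directory(boto3.client("s3"), "data", "your-bucket-name", prefix="datasets")` would store `data/train.csv` as `datasets/train.csv`.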

Conclusion

I suggest reading the Boto3 docs for more advanced examples of managing your AWS resources. They cover services other than S3 and contain code recipes for the most common tasks with each one.

Thanks for reading!


Source: https://towardsdatascience.com/how-to-upload-and-download-files-from-aws-s3-using-python-2022-4c9b787b15f2?source=post_internal_links---------6-------------------------------
